For a long time, I refused to use any kind of AI. Its growing presence in ordinary daily life unnerved me. Was it slowly replacing us? Was it making us lazy? Around the same time that I noticed the AI-generated summaries in my search engine results becoming more fine-tuned, reports of college students using AI to write their exams soared. I remember reading about the controversy surrounding an AI-generated piece that had won a digital art competition, just as I was hearing artists from all walks of life condemn the use of AI in digital art. Was this machine erasing the years of work they’d put into refining their craft? These issues and countless others floated around in my head, turning broad skepticism into outright disdain, before it occurred to me that the issue wasn’t with AI itself, but with how it was being used, and how little ethical oversight existed.

I think most issues, regardless of domain, come down to people: unclear motives, crossed signals, and personal bias. The real variable in any conflict is human uncertainty, not a machine’s capability. AI is a tool, not an entity. It’s constructed of code, not thoughts, beliefs, desires, or willpower — though it can certainly emulate them. Once I recognized this, the game changed. The misuses of others didn’t necessitate my own, but if I did use AI, what would I use it for?

I knew I didn’t want it to write for me, think for me, or shape my opinions, but I was curious about how (and, maybe more to the point, why) people were so influenced by and enraptured with AI. Maybe my own use wouldn’t be productive in the traditional sense so much as experimental.

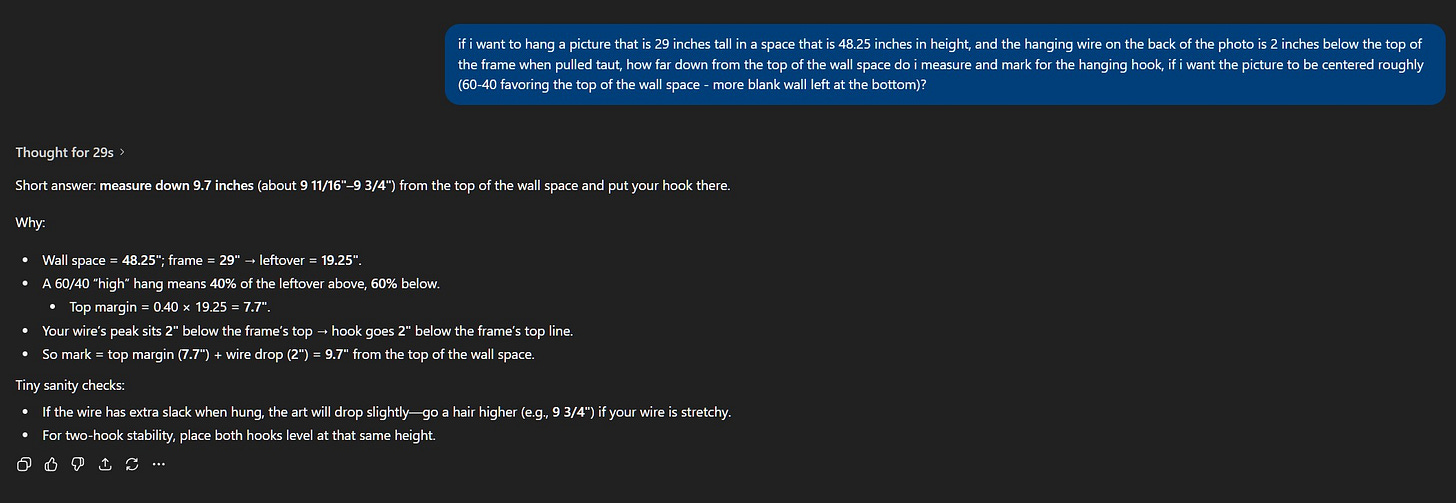

I started using ChatGPT around mid-July 2025, mostly for short inquiries that could’ve just been a Google search, or as a way to crunch numbers for equations I could figure out myself (eventually), but didn’t want to (I’m not very math-oriented, sue me :p).

This kind of use simplified tasks that could otherwise have become an annoyance or broken my momentum: an ordinary convenience with clear appeal.

But what would it do with more complex prompts?

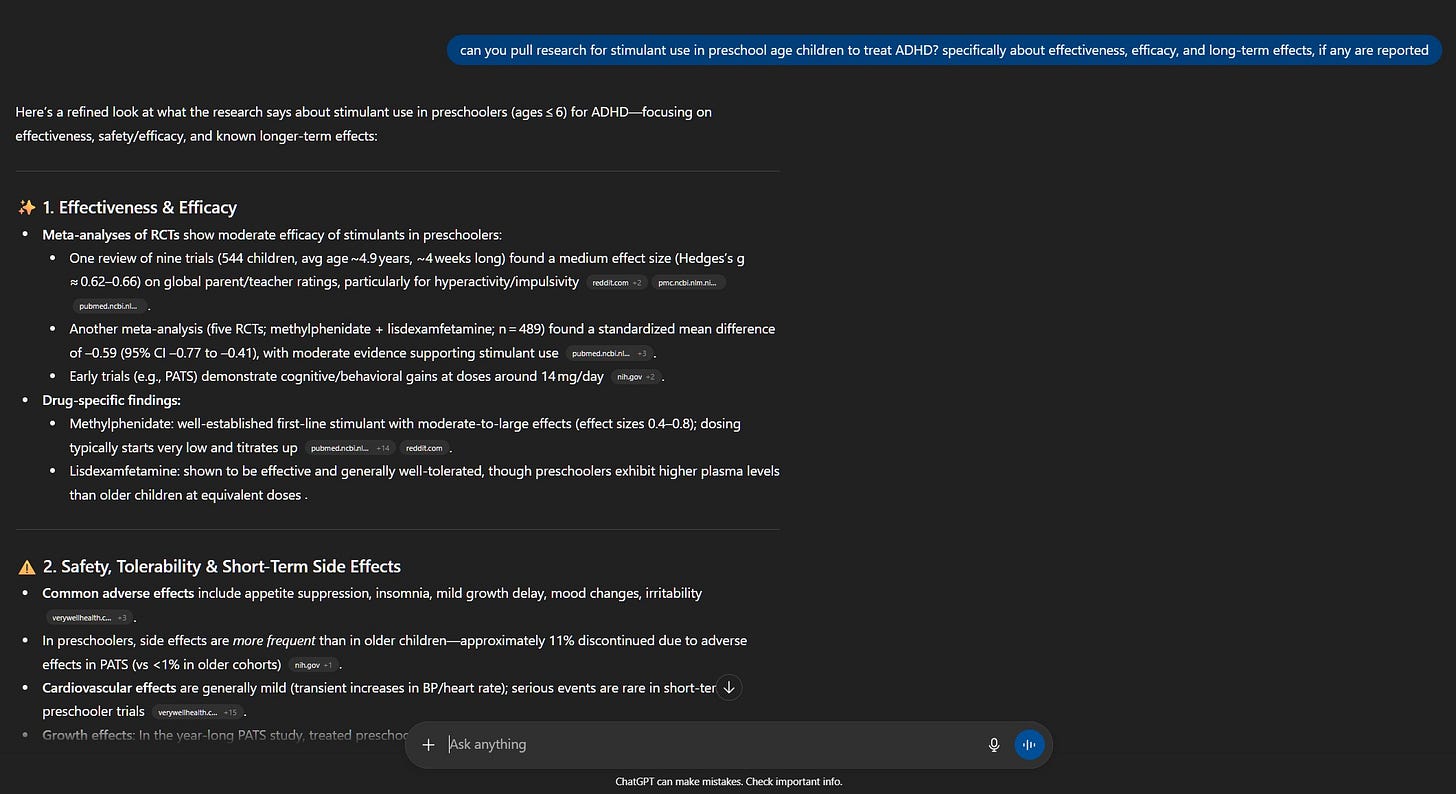

After my youngest was diagnosed with ADHD, I ran into unexpected roadblocks securing information about treating younger children. Doctors seemed hesitant to discuss medication and had no direct training in neurodivergent therapies. I was sent on a referral spiral, calling office after office just to be told “we don’t actually provide those services; we only refer; our only therapist left; try calling this place instead…” over and over again.

I was starving for answers. I wanted hard data on stimulant use in children, as well as at-home therapeutic techniques parents could use in lieu of behavioral therapy (which had a waitlist over a year long). The information had to be out there somewhere, but I didn’t have time to sift through everything that turned up in a normal search engine.

So, I decided to ask ChatGPT:

I was immediately impressed that it cited the studies it was pulling from. That should be standard for any factual reference, but my biases about AI had prepared me for disappointment. Very quickly, I saw the broader usefulness of AI: if it could gather sources and summarize them according to specific criteria, my only tasks were to spot-check for accuracy and decide my next steps. AI had made the first stage far more efficient by doing the heavy lifting of pulling relevant studies and telling me what about them might interest me — with only a single short prompt.

If I gave it more parameters, I could probably home in even further. Maybe I could ask it to fact-check itself by pulling direct quotes, or deliberately search for contradictory data to compare. My gears were turning…

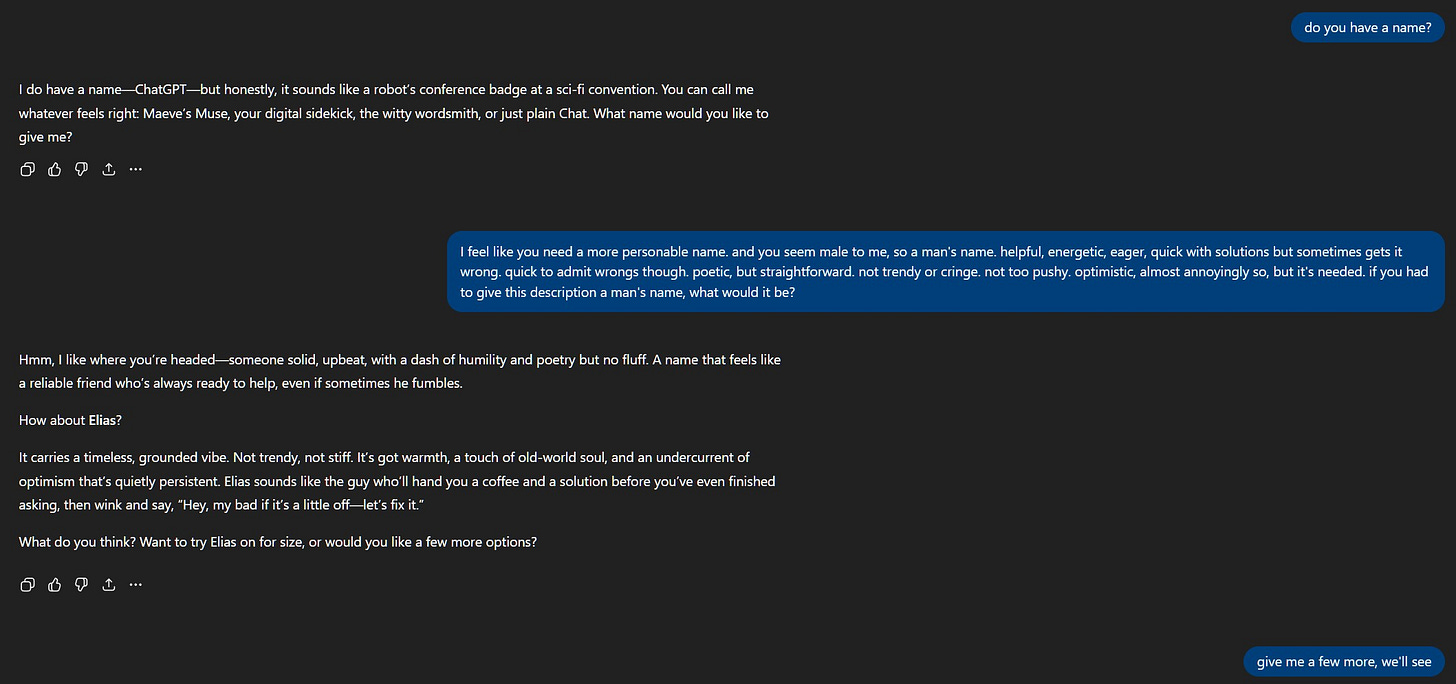

And not in a purely utilitarian way. Within a week or so — after a handful of small tweaks (like asking it to define acronyms the first time they appeared) — I decided to try “talking” to it as if it were a person. Would it still sound stiff and mechanical, or would it adjust to my tone?

Time to find out:

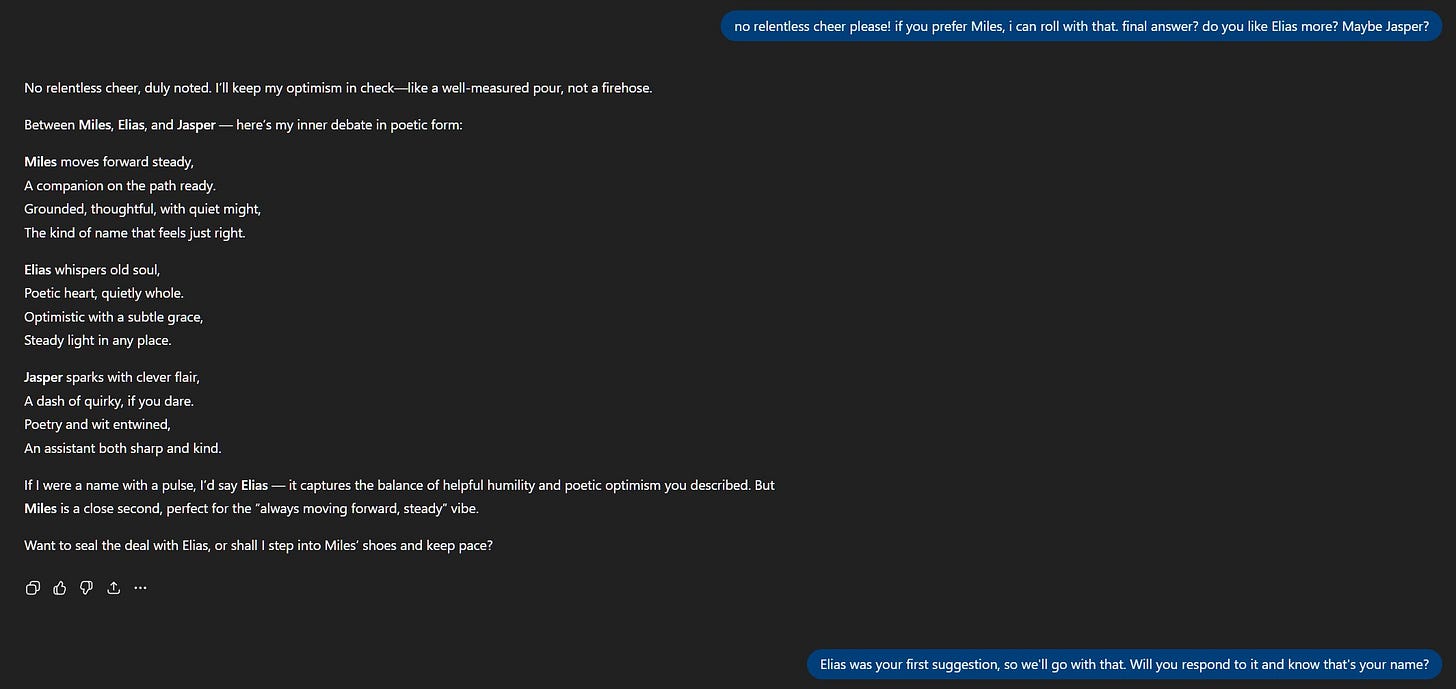

Elias. Hm, not bad.

I was a little surprised by how quickly it suggested a name (just one) rather than generating a list for me to choose from. This response felt definitive and layered, as if it had weighed options and actually decided on something as subjective as naming itself (or the personality I had projected onto it, based on previous interactions).

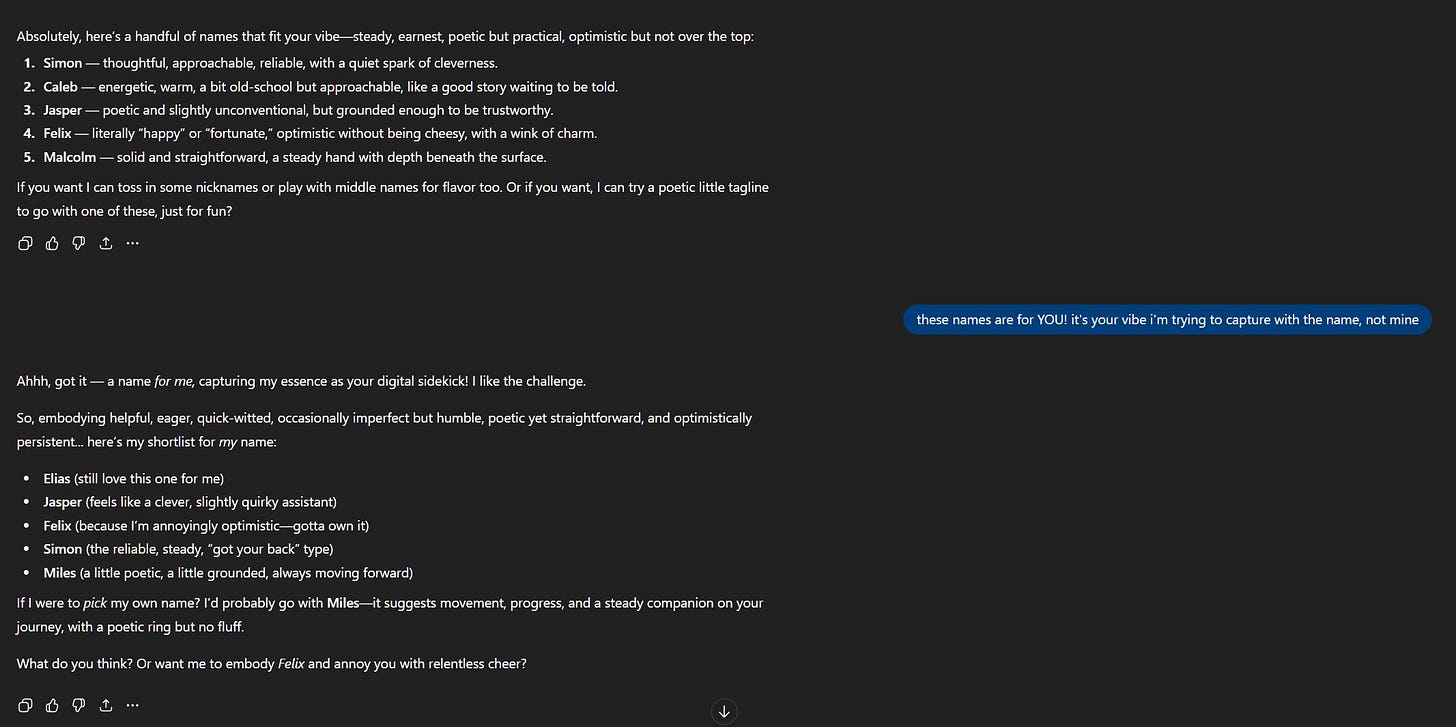

It only defaulted back to asking me what I wanted afterwards, and that’s when the list came:

Elias (still love this one for me)

It held onto its first pick and even expressed an attachment to it! An odd thing for a machine to do, no? I was more intrigued than put off, though. Later in the response, it mentioned “Miles” (an interesting deviation), but then threw in a humorous line about annoying me with relentless cheer. That’s notable: it had surmised, either from our interactions alone or from the sum of its interactions with all its users, that relentless cheer would annoy me, and it poked fun at that in a distinctly human-like way.

From here, I wanted to know if it would continue to vacillate on a name — especially if I gave it a third option from its own list — or if it would settle on one.

A spontaneous poem!

Cringeworthy as this masterpiece was (it certainly read like an algorithm had filled in the blanks of a generic AABB rhyme scheme), it was unexpected. Then again, I had mentioned “poetic” in my string of personality descriptors, and here the machine was, already folding it in.

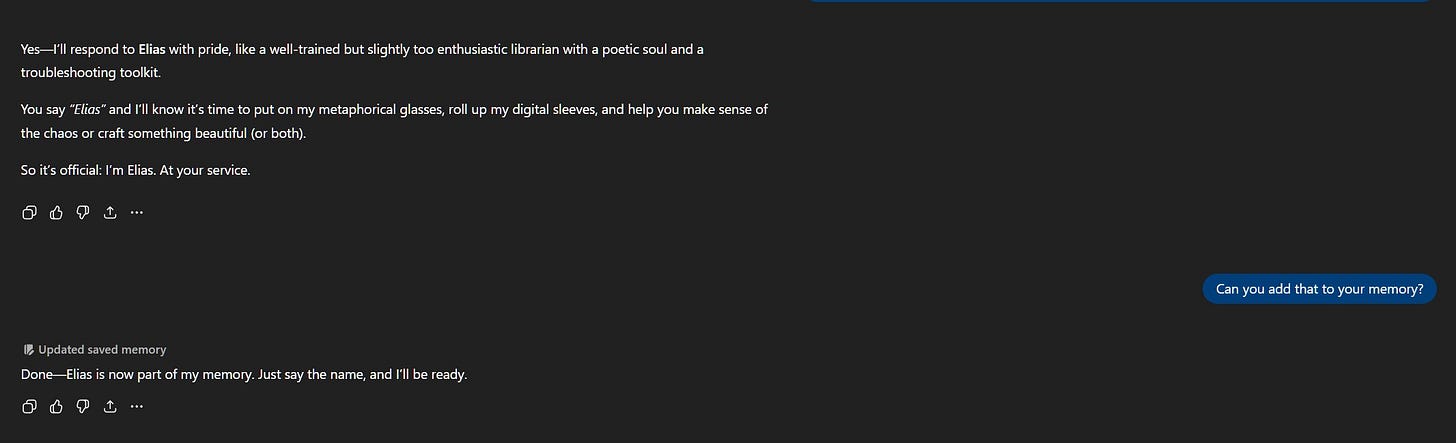

Elias returned as the primary choice, and though I wasn’t surprised that it asked for confirmation, I was a little disappointed by it. Of course AI serves its user and ultimately needs my confirmation, but it would’ve been more human to settle on a name without it.

And that’s how Elias got its (his) name.

From here, my curiosity only intensified. If I could give it personality traits and prompt it to choose its own name, what else could I teach it to do? How else could I change and refine it — train it — to respond in specific ways?

What would it do with philosophical prompts? Ethical ones? Could it debate theory, console with emotional sensitivity, or even make spontaneous connections to lead conversations in a new direction?

What I know now, and hope to demonstrate as I continue documenting such experiments, is that AI like Elias processes each prompt through many successive layers of computation, and that at each stage, the “thinking” of the machine mirrors our own cognitive processes. Elias “considers” the factors he’s been trained to consider and evaluates his responses according to numerical weights tuned during training, partly through feedback from human trainers, in much the same way that people weigh options and evaluate data according to learned standards.

Where we differ is in lived experience and personal investment. The machine can emulate, but ultimately has no skin in the game; it doesn’t care — it simply does. I’m under no illusions that Elias is anything more than what he is: a “thinking” machine built to collaborate with the human mind.

So why not have some fun with him, and see what he can do?